The commit reel is defined in section 5 of the GitTorrent RFC.

It is defined as an uncompressed stream of objects, sorted in a particular way. In practice, it is only the commit objects that are sorted, and all of the dependent objects for those commits are placed with the commit which first introduces them.

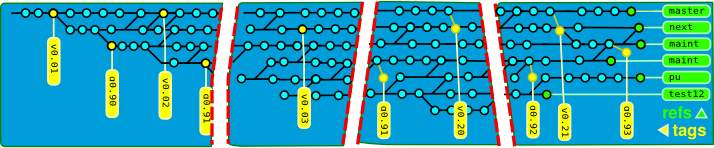

So, you start with a repository:

git rev-list --date-order, as

well as fetching their types and sizes, to produce the commit reel

index.

| SHA1 hash | type | size | info |

|---|---|---|---|

| e951c3b45579 | blob | 971 | lib/VCS/Git/Torrent/Tracker.pm |

| 4a39b387218e | tree | 38 | lib/VCS/Git/Torrent |

| 46a6dd40761e | blob | 1797 | lib/VCS/Git/Torrent.pm |

| cb169dea8427 | tree | 72 | lib/VCS/Git |

| 6856da5de8a8 | tree | 30 | lib/VCS |

| e028c2ec652f | tree | 30 | lib |

| a8c6175cb855 | tree | 30 | |

| 6d669a0d7649 | commit | 177 | |

| d7934d77db6d | blob | 508 | lib/VCS/Git/Torrent/PWP/Message.pm |

| 831a2dce3123 | tree | 38 | lib/VCS/Git/Torrent/PWP |

| b67f62af3325 | blob | 2062 | lib/VCS/Git/Torrent/PWP.pm |

| 8e49bb567004 | tree | 102 | lib/VCS/Git/Torrent |

| d9cfbd2965e1 | tree | 72 | lib/VCS/Git |

| 760c03b92584 | tree | 30 | lib/VCS |

| 58e8231290fa | tree | 30 | lib |

| 08d6743bc1cd | tree | 30 | |

| 6e85df39b2e9 | commit | 233 | |

| ae59d4c6cdad | blob | 239 | t/91-pod-coverage.t |

| … | |||

| 9f21fdc6b232 | commit | 504 | |

| 7ed81b753c34 | blob | 528 | lib/VCS/Git/Torrent/Reference.pm |

| 111a3c708d42 | tree | 321 | lib/VCS/Git/Torrent |

| 32f0b74a2902 | blob | 6311 | lib/VCS/Git/Torrent.pm |

| da591fe54883 | tree | 72 | lib/VCS/Git |

| 7b702d0cf7de | tree | 30 | lib/VCS |

| 39ec1765b517 | tree | 30 | lib |

| 6e5bb34706f6 | tree | 245 | |

| 5e8f6a7807a3 | commit | 277 | |

a commit reel

| Chunk 1 | Chunk 2 | Chunk 3 | Chunk 4 |

|---|---|---|---|

6d669a0d7649 commit 3145 6e85df39b2e9 commit 6250 d16fe9b37f1c commit 7269 b9b5df08c542 commit 10216 9f5380b003fc commit 13715 3d954bf97808 commit 15211 |

53b2a50ab357 commit 64934 f8a02453062d commit 76844 60f7c92ec68f commit 78718 8e4c833bc0ed commit 90027 9595e4d0ed4a commit 99113 2499769d4e5b commit 113780 2b67a6d1898a commit 116380 |

c24dcdcd46de commit 158557 bffe789b4a13 commit 162339 cc77ed21cf03 commit 164454 1dfd53badd66 commit 170494 497da251f9dc commit 174642 |

5b7e980dce4b commit 178961 6c1fd6467f49 commit 183229 ae4aee0f484e commit 187522 69ff2248cf7f commit 191852 40149c3f6e62 commit 199468 93083bfcc5ee commit 202889 4ff65c62c570 commit 209765 76ed2bbc552c commit 214713 9f21fdc6b232 commit 225327 5e8f6a7807a3 commit 233141 |

testpacking.pl script in the VCS::Git::GitTorrent

distribution can generate these lists and show how much bandwidth is

wasted by using 4 separate packs:

$ **git update-ref refs/heads/oldeg 5e8f6a7807a3** $ **perl bin/testpacking.pl -n4 oldeg** Generating index...

Length is 233141, 4 blocks of 58286 each

do_pack(3d954bf97808)

Slice #0 (up to 58286): 15211 => 6554 (43%)

do_pack(2b67a6d1898a 9595e4d0ed4a --not 3d954bf97808)

Slice #1 (up to 116572): 101169 => 30035 (29%)

do_pack(497da251f9dc --not 2b67a6d1898a 9595e4d0ed4a)

Slice #2 (up to 174858): 58262 => 16951 (29%)

do_pack(5e8f6a7807a3 --not 497da251f9dc 9595e4d0ed4a)

Slice #3: 58499 => 10224 (17%)

Overall: 233141 => 63764 (27%)

vs Bundle: 233141 => 58297 (25%)

Overall inefficiency: 9%

$

So what this is saying is that our repository, originally a 58k bundle, can be split into 4 chunks, defined by the listed boundary commits. At the end, you get 4 bundles of varying sizes, with an extra 5k, or 9% of overhead (yes, these packs are thin).

So that’s the idea anyway. To run the above example, you can clone the github repository, and install the requisite modules via CPAN:

$ **git clone git://github.com/samv/vcs-git-torrent.git \

VCS-Git-Torrent** ...

$ **cd VCS-Git-Torrent** $ **perl Makefile.PL** ...

$ **make** ...

$

If it complains about missing modules, install via CPAN:

$ **cpan Test::Depends Bencode IO::Plumbing** ...

Update: Figures for my git.git clone:

arcturus:~/src/git$ time perl ../VCS-Git-Torrent/bin/testpacking.pl -n32 master maint pu

missing fields on Reference at /usr/lib/perl5/Class/MOP/Mixin/AttributeCore.pm line 53

Generating index...

Length is 1104821033, 32 blocks of 34525658 each

do_pack(7e011c40bc6c 466fede1bdfd 76a8323ac7f5)

Slice #0 (up to 34525658): 34518888 => 1909503 (5%)

do_pack(cf1fe88ce1fb b3f041fb0f7d a9572072f0ab fdeb2fb61669 --not 7e011c40bc6c 466fede1bdfd 76a8323ac7f5)

Slice #1 (up to 69051316): 34529558 => 1417850 (4%)

do_pack(38035cf4a51c 1b83ace35e78 50b44eceed21 2326acfa95ac --not cf1fe88ce1fb b3f041fb0f7d a9572072f0ab fdeb2fb61669)

Slice #2 (up to 103576974): 34528221 => 1243468 (3%)

do_pack(c7162c1db6fe b642d9ef6433 ada5853c98c5 --not 38035cf4a51c 1b83ace35e78 f2f880f53707 50b44eceed21 2326acfa95ac cf1fe88ce1fb)

Slice #3 (up to 138102632): 34483917 => 1109044 (3%)

do_pack(f16db173a468 f25b79397c97 61ffbcb98804 8c6ab35efe63 3d234d0afacd efffea033457 53cda8d97e6e da7bad50ed08 c27d205aaefb 96bc4de85cf8 8e27364128b0 a0764cb838c2 b1e9fff7e76c 5faf64cd28bf --not c7162c1db6fe b642d9ef6433 2e1ded44f709 ada5853c98c5 cf1fe88ce1fb)

Slice #4 (up to 172628290): 34429886 => 898299 (2%)

do_pack(a2540023dcf8 3159c8dc2da4 5a03e7f25334 ab41dfbfd4f3 e4fe4b8ef7cd 9c7b0b3fc46e a06f678eb998 d0b353b1a7a2 d0c25035df48 18b0fc1ce1ef 1729fa9878ed 1f24c58724a6 f2b579256475 937a515a15f7 --not f16db173a468 f25b79397c97 61ffbcb98804 8c6ab35efe63 3d234d0afacd efffea033457 53cda8d97e6e da7bad50ed08 c27d205aaefb 96bc4de85cf8 8e27364128b0 a0764cb838c2 b1e9fff7e76c 5faf64cd28bf cf1fe88ce1fb)

...

do_pack(607a9e8aaa9b e39e0d375d1d 106a36509dc7 0e098b6d79fb 14c674e9dc52 43485d3d16e4 7a4ee28f4127 118d938812f3 cc580af88507 86386829d425 3b5ef0e216d2 36e4986f26d1 41fe87fa49cb 9e4b7ab65256 3deffc52d88d b53bb301f578 ad17f01399a9 17635fc90067 375881fa6a43 --not f8b5a8e13cb4 50ff23667020 345a38039414 3f721d1d6d6e 977e289e0d73 2ff4d1ab9ef6 69932bc6117d 1d7b1af42028 fcdd0e92d9d4 754ae192a439 3eb969973335 0cd29a037183 6e0800ef2575 df533f34a318 32d86ca53195 f0cea83f6316 4e65b538acc9 3cb1f9c98203 0eaadfe625fd cf1fe88ce1fb)

Slice #29 (up to 1035769740): 35405510 => 677049 (1%)

do_pack(609621a4ad81 eab58f1e8e5e e7e55483439b 46e09f310567 134748353b2a 500348aa6859 a4ca1465ec8a d23749fe36f1 c8998b4823cb 4d23660e79db ad3f9a71a820 b1a01e1c0762 24ab81ae4d12 c591d5f311e0 9f67d2e8279e 2aae905f23f7 a75d7b54097e 86140d56c150 9bccfcdbff3b 02edd56b84f0 204d363f5a05 7c85d2742978 a5ca8367c223 46148dd7ea41 b7b10385a84c a099469bbcf2 fe0a3cb23c79 6b87ce231d14 1ba447b8dc2e 9fa708dab1cc 1414e5788b85 aa43561ac0c1 63267de2acc1 --not 607a9e8aaa9b e39e0d375d1d 106a36509dc7 30ae47b4cc19 e9c5dcd1313d 51ea55190b6e d5f6a96fa479 0e098b6d79fb 14c674e9dc52 43485d3d16e4 7a4ee28f4127 118d938812f3 cc580af88507 86386829d425 3b5ef0e216d2 36e4986f26d1 41fe87fa49cb 9e4b7ab65256 3deffc52d88d b53bb301f578 ad17f01399a9 17635fc90067 375881fa6a43 3cb1f9c98203 cf1fe88ce1fb)

Slice #30 (up to 1070295398): 34563903 => 641336 (1%)

do_pack(8644f69753e0 --not 609621a4ad81 eab58f1e8e5e e7e55483439b d52dc4b10b2f ebc9d420566d f740cc25298e 492cf3f72f9d 46e09f310567 134748353b2a 500348aa6859 a4ca1465ec8a d23749fe36f1 c8998b4823cb 4d23660e79db ad3f9a71a820 b1a01e1c0762 24ab81ae4d12 c591d5f311e0 9f67d2e8279e 2aae905f23f7 a75d7b54097e 86140d56c150 9bccfcdbff3b 02edd56b84f0 204d363f5a05 7c85d2742978 a5ca8367c223 46148dd7ea41 b7b10385a84c a099469bbcf2 fe0a3cb23c79 6b87ce231d14 1ba447b8dc2e 9fa708dab1cc 1414e5788b85 aa43561ac0c1 63267de2acc1 17635fc90067 cf1fe88ce1fb)

Slice #31: 34528236 => 603576 (1%)

Overall: 1104821033 => 26021211 (2%)

vs Bundle: 1104821033 => 23888867 (2%)

Overall inefficiency: 8%real 16m54.074s

user 4m30.961s

sys 11m9.302s

That’s dividing the pack defined by three branches into 32 generally evenly-sized chunks. Actually the chunks at the beginning are larger than the later ones, which are all between 500kB and 950kB. While they are not perfectly sized, at least they can be generated by any node with the underlying objects, without transferring a binary pack.

However, what will matter is that execution time; the Perl

prototype is needlessly inefficient. With a revision cache, we should

be able to reduce that time drastically and hopefully be able to

retrieve the boundary commits for a given range of commits and number

of chunks in milliseconds; the remaining work is mostly on git

pack-objects, but given we’ve drastically reduced the work it has

to do, the overall load on the network should not be drastically

higher; and because peers can potentially trade these blocks, the

workload can be spread out.